Spoken Discourse

What do we study?

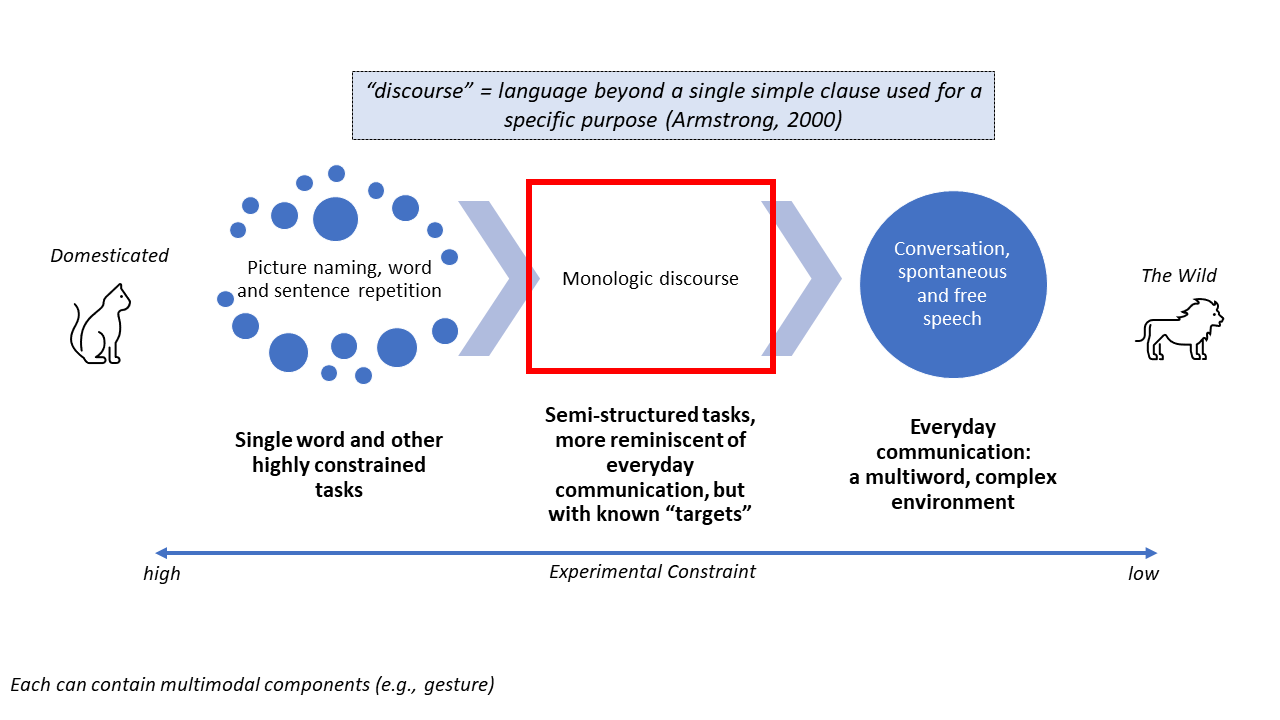

Spoken discourse is language used for a specific purpose, and is speech that goes beyond the sentence level. For example, you might tell a story about what you did last night: that’s spoken discourse! The goal of this research line is to integrate spoken discourse analysis into typical language assessment because, at present, analyzing discourse is not standard in clinical or research settings. Standardized tests make up the bulk of language and communication assessment in aphasia. However, there are ethical and scientific issues with characterizing communication when relying solely on standardized (and normed) assessments. In contrast, acquiring and analyzing discourse has many benefits that foster inclusivity and account for individual factors. Analyzing discourse enables simultaneous investigation of language structure (phonology, morphology, syntax, semantics, and pragmatics), dimensions of use (form, content, context), and multimodal strategies (gesture, eye gaze), lending a robust and comprehensive view of language and communication. During discourse, individuals with aphasia are able to speak on topics that are important to them. This establishes an inclusive and salient means of understanding communication ability. Finally, analyzing discourse may be a more sensitive means of establishing communicative competence across the aphasia severity spectrum. For example, discourse assessment identifies residual, subtle language impairments in the mildest forms of aphasia and thereby provides critical evidence for the receipt of continued clinical services by these individuals, many of whom want to rejoin the workforce.

Research from our lab

Context and retelling impact language

How does context play a role in the kind of language elicited during discourse, and how does retelling impact language? Asking these questions aims to improve how we measure discourse change after therapy and over time. Establishing that a measure is reliable across a short time frame is important for sensitively measuring change. Using classical test theory terminology, this is referred to as test-retest reliability. If a measure derived from discourse, like number of words, is shown to have low test-retest reliability, the observed change in number of words after treatment is more likely related to inherent error instead of ‘true’ treatment-induced change. Therefore, establishing test-retest reliability directly improves our ability to detect often-subtle intervention-related spoken discourse changes.
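To make the link between test-retest reliability and detectable change concrete, here is a minimal sketch in Python. It is not the analysis used in the publications cited on this page. It assumes reliability is estimated with a simple Pearson correlation between two sessions (published studies often use an intraclass correlation instead), and it uses the common classical test theory formulas for the standard error of measurement and the minimal detectable change at 90% confidence. The word counts and all function names are hypothetical and for illustration only.

```python
from math import sqrt
from statistics import mean, stdev


def pearson_r(x, y):
    """Pearson correlation between two equal-length lists of scores."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    var_x = sum((a - mx) ** 2 for a in x)
    var_y = sum((b - my) ** 2 for b in y)
    return cov / sqrt(var_x * var_y)


def standard_error_of_measurement(scores, reliability):
    """SEM = SD * sqrt(1 - reliability); lower reliability means more error."""
    return stdev(scores) * sqrt(max(0.0, 1.0 - reliability))


def minimal_detectable_change(sem, z=1.645):
    """Smallest change exceeding measurement error (z = 1.645 gives MDC90)."""
    return z * sqrt(2) * sem


# Hypothetical number-of-words scores for six speakers, told twice
# a few days apart with no treatment in between.
test = [120, 85, 200, 150, 60, 175]
retest = [130, 80, 190, 160, 70, 170]

r = pearson_r(test, retest)
sem = standard_error_of_measurement(test, r)
mdc90 = minimal_detectable_change(sem)

print(f"test-retest r = {r:.2f}")
print(f"SEM = {sem:.1f} words, MDC90 = {mdc90:.1f} words")
```

Under these assumptions, a post-treatment change in number of words smaller than the MDC90 cannot be distinguished from measurement error, which is why a measure with low test-retest reliability makes treatment-induced change hard to detect.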

One arm of my research characterizes the complex way that language and task interact during discourse in aphasia. This is important to investigate because discourse is often assessed using a single task or a few tasks, and language metrics are derived from each or summed across tasks (Stark, 2019; Stark & Fukuyama, 2020). Historically, monologue spoken discourse has been elicited across a variety of genres, such as fictional narratives (e.g., Cinderella story), autobiographical narratives (e.g., an important event), picture and picture sequence descriptions, and procedural narratives (e.g., “how to...”). Within these genres are specific tasks; for example, a procedural narrative task to elicit discourse commonly used in aphasia is “tell me how to make a peanut butter and jelly sandwich.” Across two papers employing different statistical methodology, I evaluated commonly used discourse tasks to examine the extent to which each discourse task produced a unique linguistic profile. The data was drawn from AphasiaBank, a large corpus comprising 300+ spoken discourse samples from individuals with aphasia and 200+ samples from individuals without aphasia or brain damage. I identified that each task did indeed produce a unique linguistic profile for individuals with and without aphasia. For example, in the case of the aphasia group, the “how to make a sandwich” procedural narrative elicited more grammatically simple and less propositionally dense language than other tasks (Stark, 2019). In Stark & Fukuyama, 2020, we elaborated on this finding by demonstrating that task effects on language are markedly less apparent in individuals with more severe aphasia, likely because these individuals have limited language available to them and therefore cannot adapt their language to task demands. These findings are important for decision making as it relates to clinical assessment and for research specificity. 
For example, if one’s intent is to evaluate the grammatical system of an individual with mild aphasia, analyzing procedural discourse may lend an overly simplistic, incomplete assessment.

Given the finding that task and language interact for most individuals with aphasia, it may also be the case that discourse-extracted measures are reliable for some, but not all, tasks. My current research evaluates the extent to which test-retest language reliability varies across time and task. A completed pilot project (ASHFoundation New Investigator Award) and an ongoing, larger project on which I am Co-Investigator (R01DC008524) have begun to make clear that task has a large impact on the reliability of language during discourse, to a greater extent than factors like aphasia severity and total words produced (Stark et al., 2023; Doub, Hittson & Stark, 2020). To summarize, we have found that individuals with aphasia produce relatively reliable lexical-semantic information (e.g., correct information units) with similar fluency (e.g., words per minute) across discourse tasks. However, some language measures were highly variable across retellings, including syntactic measures, which may have been the result of sampling issues and low word count for some tasks. Importantly, we also identified that test-retest reliability was task-specific. We found that neither sample length nor aphasia severity accounted for a majority of the variance that we observed across tasks from test to retest. As such, we hypothesized that it was likely specifics about each task (e.g., cognitive components, instructions) that mediated the reliability of discourse metrics extracted from it. This work is ongoing and has implications for planning which, and how many, tasks to evaluate to gain a comprehensive and stable view of language ability via aphasic discourse assessment.

Kent Meinert presents a poster at the 2025 Clinical Aphasiology Conference introducing our test-retest dataset

Improving evaluation of discourse

An international survey that I spearheaded found that researchers and clinicians endorse a number of barriers precluding discourse analysis, including a lack of time for conducting analyses, inadequate training in discourse-related methodologies and analysis, and a lack of culturally and linguistically diverse discourse stimuli (Stark et al., 2021).

Dr. Sarah Grace Dalton (University of Georgia) and I are re-envisioning the approach to discourse elicitation and analysis to address these barriers. Thanks to funding from the National Aphasia Association, we are co-designing discourse stimuli and procedures in partnership with individuals with aphasia. The goal is to create discourse tasks/elicitation methods that are salient as well as culturally and linguistically appropriate and representative. With seed funding, we are also co-designing a transcription-less discourse analysis tool with speech-language pathologists (SLPs) for live scoring of discourse collected during clinical assessment. These projects will create multiple open-source tools that improve discourse analysis of aphasic speech in both research and clinical settings.

A demonstrated need in the aphasia discourse community is bringing together interdisciplinary experts. In 2019, I co-founded a working group, FOQUSAphasia (FOcusing on the QUality of Spoken discourse in Aphasia; www.foqusaphasia.com), which I presently co-oversee with Dr. Manaswita Dutta (Stark et al., 2020). The goal of FOQUSAphasia is to provide a free networking space for interdisciplinary researchers and clinicians to discuss discourse in aphasia. A major initiative of the FOQUSAphasia team was to invite both early career and established researchers and clinicians to give lectures and workshops related to spoken discourse in aphasia, as well as in related neurogenic communication disorders. We have hosted many free lectures and workshops, all archived on our YouTube channel (@foqusaphasia7483). We also host weekly virtual writing groups which serve to not only protect writing time but also to facilitate networking among like-minded colleagues (15-20 members per semester). FOQUSAphasia is gaining international traction, demonstrated by an invitation to write a chapter entitled “FOQUSAphasia: An initiative to facilitate research of spoken discourse in aphasia and its translation into improved evidence-based practice for discourse treatment” for the 2023 Springer book edited by Dr. Anthony Kong, Spoken discourse impairments in the neurogenic populations: A state-of-the art, contemporary approach. I created and moderated a symposium at the Academy of Aphasia in 2021 that highlighted research from members of FOQUSAphasia. With FOQUSAphasia members, I spearheaded the creation of a set of best practice guidelines for publishing about spoken discourse research in aphasia, to enhance the overall quality of ongoing research by improving replicability, enabling meta-analysis, and supporting implementation in clinical practice (Stark et al., 2022).

Resources for spoken discourse can be found at:

https://osf.io/rqnp4/ - Supplemental material for Stark, 2019

https://osf.io/cth89/ - Supplemental material for Stark et al., 2021, survey

https://osf.io/y48n9/ - Best practices guidelines for reporting on spoken discourse

https://osf.io/4zcpn/ - Materials for the test-retest 2024 publication

Multimedia presentations on this topic

Funding

Thank you to the following for supporting this research!